Running an LLM on a local computer

Can you have a ChatGPT-like experience hosted entirely on your laptop? Yes! And it is straightforward to set up. A number of open-source LLMs are available as alternatives to GPT-4, the OpenAI model behind the famous ChatGPT.

This article uses the Llama models provided by Meta as an example.

How to get the model?

Let's follow this tutorial (or the version for Windows or Linux, depending on what you use): https://www.llama.com/docs/llama-everywhere/running-meta-llama-on-mac/

In a nutshell, there are only 2 steps you need to do to turn your computer into a conversational AI friend:

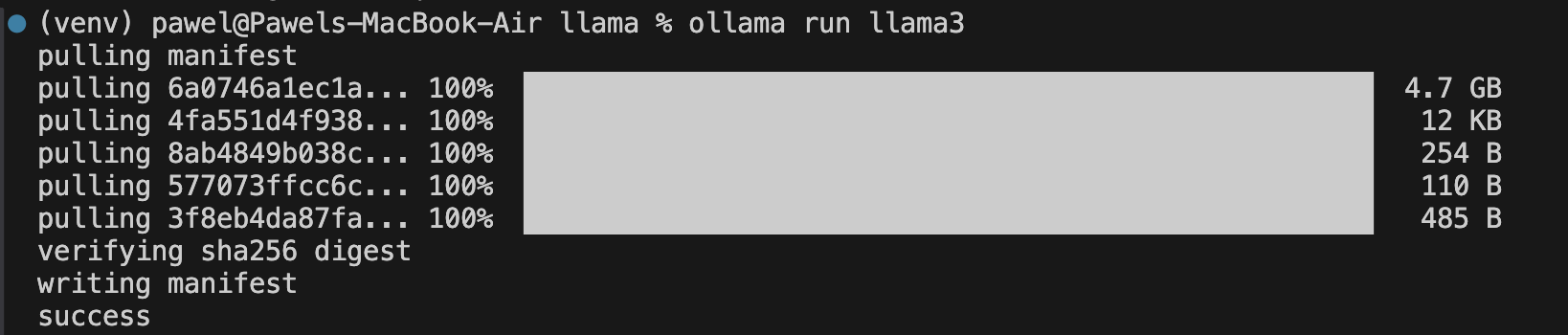

- Download ollama

- Run the model:

And that's it!
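If you would rather script the "run the model" step than type it in the terminal, here is a minimal sketch in Python. It assumes ollama is already installed and on your PATH, and that `ollama run` accepts a one-shot prompt as its last argument. The helper names `ollama_command` and `run_model` are made up for this illustration, and the model tag you pass in (for example `llama3`) is a placeholder for whichever model you chose.

```python
import shutil
import subprocess


def ollama_command(model, prompt=None):
    """Build the argument list for `ollama run <model> [prompt]`."""
    cmd = ["ollama", "run", model]
    if prompt is not None:
        cmd.append(prompt)
    return cmd


def run_model(model, prompt):
    """Run a single prompt through the ollama CLI and return its answer."""
    # Fail early with a readable message if the CLI is missing.
    if shutil.which("ollama") is None:
        raise RuntimeError("ollama not found on PATH; install it first")
    result = subprocess.run(
        ollama_command(model, prompt),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if ollama exits with an error
    )
    return result.stdout.strip()
```

Calling `run_model("llama3", "Say hello in one sentence.")` should return the model's reply as a string. The first call for a given model may take a while, because ollama downloads the model if it is not on disk yet.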

How to use the model?

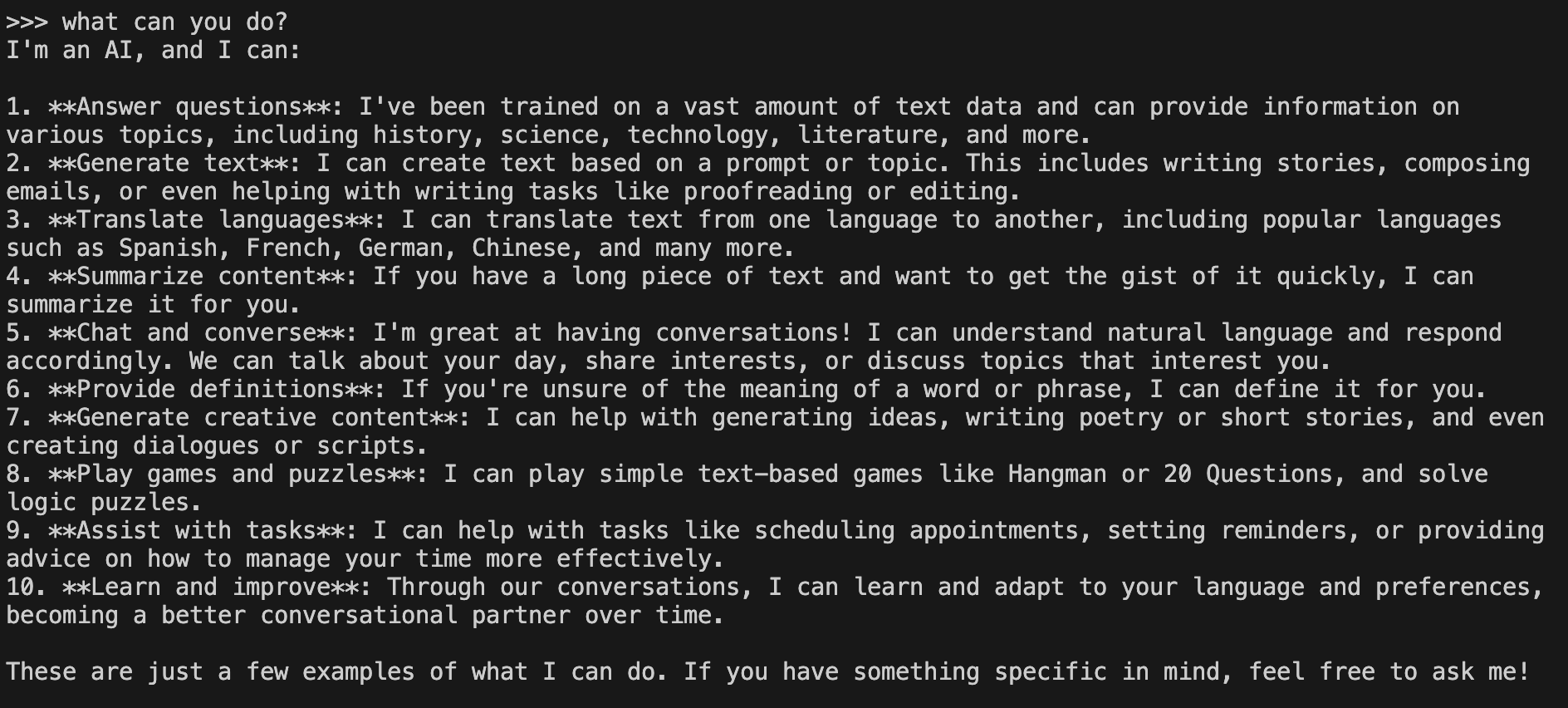

Now you can ask the model questions in the terminal:

It is amazing that a fully functional LLM can fit into 4 GB of disk space and generate answers on any topic. I turned the Wi-Fi off to prove that it is not a trick ;-)

The model can also be consumed programmatically via an API or with SDKs.
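As a rough illustration of the API route, the sketch below talks to the local ollama server using only the Python standard library. It assumes the server is running at its default address (`http://localhost:11434`) and uses the `/api/generate` endpoint with streaming turned off. The function names `build_request` and `ask` are hypothetical helpers for this article, and the model tag is a placeholder.

```python
import json
import urllib.request

# Assumption: the ollama server is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model, prompt, url=OLLAMA_URL):
    """Build a non-streaming POST request for the /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model, prompt):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the whole answer arrives in one "response" field.
    return body["response"]
```

For example, `ask("llama3", "Why is the sky blue?")` should return the answer as a plain string, provided that model has already been downloaded. Since everything goes to localhost, this works with the Wi-Fi off as well.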

Downloading the models via llama-stack

If you started the journey like me, by downloading the models as explained here, it turns out that this is not needed when using ollama.

ollama downloads its own copy of the model to ~/.ollama/models. If you have also downloaded the model with the llama-stack CLI, you can delete it from ~/.llama/checkpoints/ to save disk space.
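Before deleting anything, it can help to see how much space each copy actually takes. The following is a small sketch that only reads file sizes and never removes files. The two directories are the ones mentioned above; the helper names `dir_size_bytes`, `default_model_dirs` and `report` are made up for this example.

```python
from pathlib import Path


def dir_size_bytes(path):
    """Sum the sizes of all regular files under path (0 if it does not exist)."""
    path = Path(path)
    if not path.is_dir():
        return 0
    return sum(f.stat().st_size for f in path.rglob("*") if f.is_file())


def default_model_dirs():
    """The two model locations mentioned in this article."""
    home = Path.home()
    return [home / ".ollama" / "models", home / ".llama" / "checkpoints"]


def report(paths):
    """Return (path, size in GB) pairs for the given directories."""
    return [(str(p), dir_size_bytes(p) / 1e9) for p in paths]
```

Running `for path, gb in report(default_model_dirs()): print(f"{path}: {gb:.1f} GB")` prints one line per directory, so you can check whether the llama-stack copy is worth cleaning up.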